Evolving Government: Bureaucrats will still matter even after AI becomes commonplace

Part 6 of the Evolving Government series with Dcode42

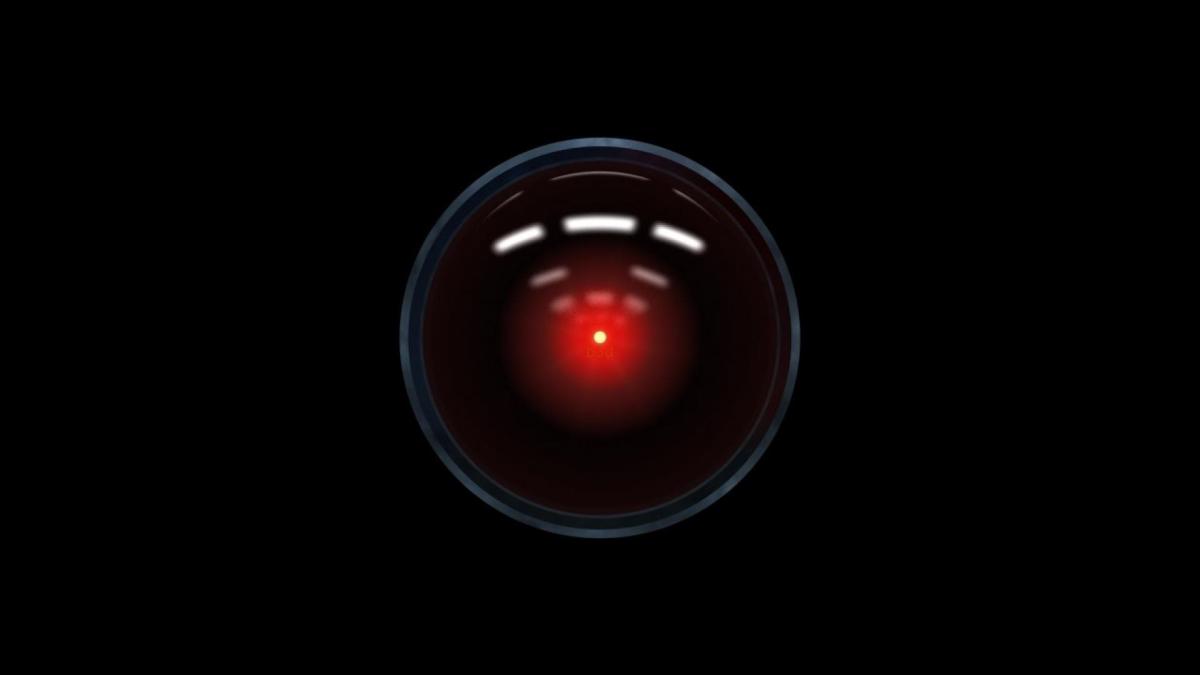

For years our perception of artificial intelligence has been shaped by friendly characters like Rosie the Robot, introduced by “The Jetsons,” and more menacing figures such as HAL in “2001: A Space Odyssey.” But while intelligent machines continue to entertain audiences, they also pose an ethical dilemma for technology adopters in both the public and private sectors.

AI offers many exciting opportunities to free humans from mundane tasks, but when we remove the people from the process, we need to make sure we don’t leave their biases behind. Additionally, new standards in Europe, specifically the General Data Protection Regulation, may limit the amount of independence a machine or algorithm has to operate and learn on its own.

AI and machine learning are beginning to change the way we work and operate on a day-to-day basis. We communicate with online service chatbots and ask Siri or Alexa if we will make it to our meeting on time. These advancements will continue to make our interactions faster and more efficient, but many worry that AI has the potential to take jobs away from humans. Additionally, it remains to be seen how, when or if artificial intelligence will be able to inform decisions in a way that successfully removes bias.

“This is a question that the private sector and the federal government is grappling with every day as they move to adopt AI and ML,” says Dcode42 founder Meagan Metzger. “But what we have seen is that, if implemented effectively, AI actually frees humans to concentrate on more strategic and creative tasks that cannot be done by machines.”

Catalytic, a member of Dcode42’s AI and ML cohort, has focused its mission around this very idea of letting people be people and systems be systems. Its pushbot technology empowers organizations by automating mundane and repeatable tasks. The result is increased output, more accurate work and improved speed. Catalytic frees workers from the tedious aspects of their jobs, shifting human resources to projects that require the kind of complex thinking and decision making that machines cannot replace.

In addition to the concern about labor automation, civil rights and consumer advocacy organizations fear that ceding control to AI will compound racial and social biases held by humans. The critical first step toward training artificial intelligence and machine learning is feeding data into algorithms that machines then learn from to conduct their analysis. If data used in this process of “training” incorporates human error and biases, then AI processes might only serve to further entrench biases through more efficient processing power.

The potential for this to go horribly wrong is evinced by a Twitter bot experiment conducted by Microsoft last year. This test in “conversational understanding” was intended to demonstrate that Microsoft’s Twitter bot Tay could learn to converse playfully via social media. But Tay was quickly corrupted by users tweeting racist and misogynistic comments. In just 24 hours, Tay became a parrot of these horrific ideologies. While Tay is a simple form of AI, and many other systems are far more sophisticated, the experiment offers an important lesson about the continued need to monitor data input and maintain ongoing human supervision of AI and ML.

The Department of Homeland Security is facing exactly this challenge as it develops and tests the Automated Virtual Agent for Truth Assessments in Real-Time (AVATAR) program. AVATAR is designed to augment human screeners at border crossings. The development and training of the AVATAR technology will require great care to ensure that it does not itself propagate human biases in border screenings. Methods of training used to avoid bias in human resources should be applied to the development of AI and ML algorithms.

This sort of advanced technology will continue to require supervision from humans to ensure that both the inputs and outputs of data analysis fit the requirements of a situation, such as flagging suspicious behavior, and do so in a way that we as a society are comfortable with. AI and ML are powerful tools to make our lives easier and our professional endeavors more efficient, but we are far from the day when machines will be able to make decisions for us or take over all of our jobs, particularly in public sector use cases that impact the safety and livelihoods of millions of citizens. Federal agencies can realize incredible efficiencies through the adoption of AI and ML, but this adoption should be conducted with great care so we end up closer to the Rosie the Robot side of AI. HAL need not apply.

Dcode42 is a government-focused emerging technology accelerator that recently graduated a cohort of AI and ML companies. Dcode42 will be hosting a Discussion on AI and Government Regulation in San Francisco on August 15 with The Bridge and the U.S. Chamber of Commerce.

Lydia Hackert is a Dcode42 associate.